Berkeley Lab scientists are developing new AI models to push the boundaries of science, and applying AI to make discoveries in biology, physics, clean energy, climate, materials, and more.

Demonstrating the power of the MACE-MP-0 model and its qualitative and quantitative accuracy on a diverse set of problems in the physical sciences, including the properties of solids, liquids, gases, chemical reactions, interfaces, and even the dynamics of a small protein.

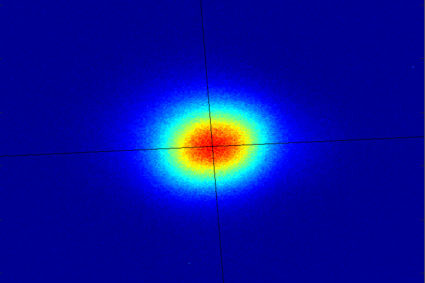

Training neural networks to enable a novel feed-forward scheme that increases source size stability by up to an order of magnitude compared with conventional physics-model-based approaches.

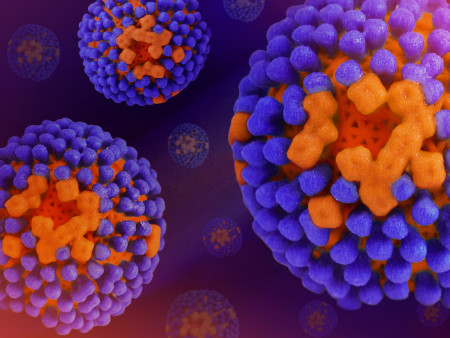

Developing a generative pre-trained AI model to enhance the functional properties of proteins for biomanufacturing and to advance self-driving labs for synthetic biology.

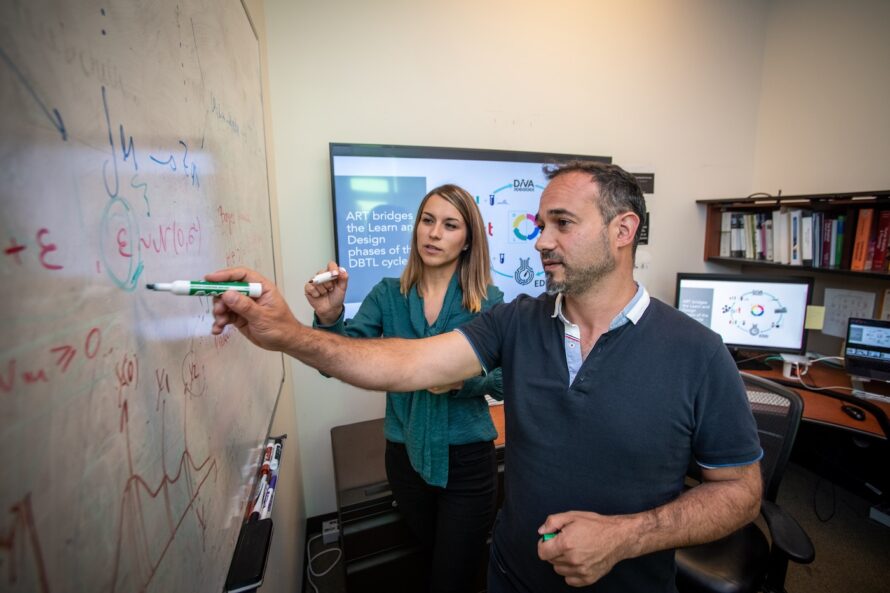

CAMERA is an integrated, cross-disciplinary center that aims to invent, develop, and deliver the fundamental new mathematics required to capitalize on experimental investigations at scientific facilities.

This project investigates the many connections between data-driven and science-driven generative models.

Next-generation Gaussian (and Gaussian-related) process engine for flexible, domain-informed and HPC-ready stochastic function approximation.

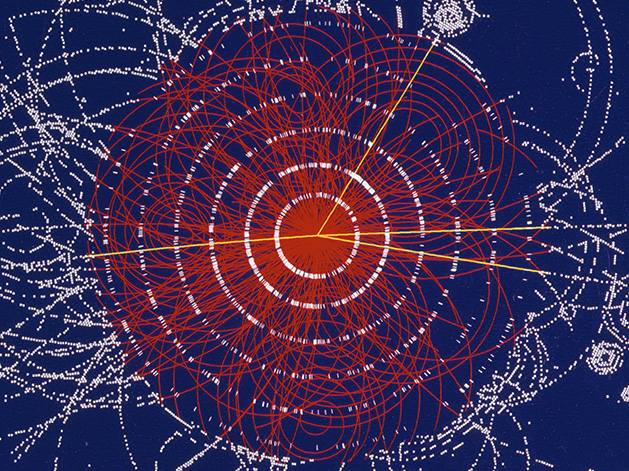

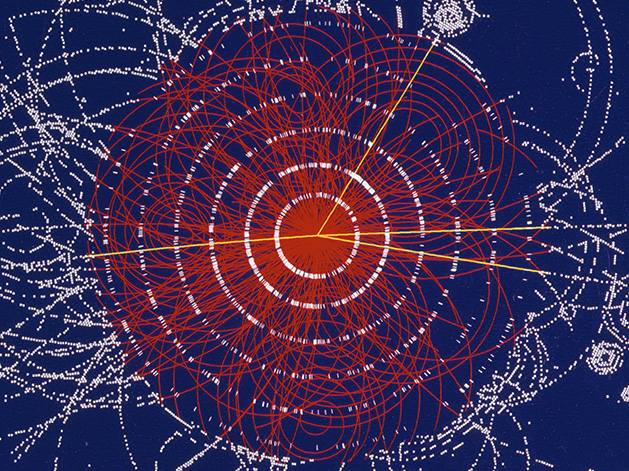

A collaboration of data scientists and computational physicists developing graph neural network models aimed at reconstructing millions of particle trajectories per second from petabytes of raw data produced by the next generation of particle tracking detectors at the energy and intensity frontiers.

Exploring how pre-trained ML could be used for scientific ML (SciML) applications, specifically in the context of transfer learning.

FourCastNet, short for Fourier Forecasting Neural Network, is a global data-driven weather forecasting model that provides accurate short- to medium-range global predictions at high resolution.

Developing AI models that generalize across different chemical systems and are trained on large datasets, aiding in more accurate and efficient predictions in the field of materials science.

The gpCAM project consists of an API and software designed to make autonomous data acquisition and analysis for experiments and simulations faster, simpler, and more widely available by leveraging active learning.

An optimization algorithm specialized in finding a diverse set of optima, alleviating challenges of non-uniqueness that are common in modern applications.
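As a rough illustration of what "finding a diverse set of optima" can mean, the sketch below runs plain gradient descent from several starting points and keeps only the distinct results. It is a generic multi-start scheme, not the project's algorithm; the function names, the test function f(x) = (x^2 - 1)^2, the step size, and the tolerance are all assumptions chosen for the example.

```python
def grad_descent(grad, x0, step=0.05, iters=500):
    """Plain fixed-step gradient descent in one dimension."""
    x = x0
    for _ in range(iters):
        x -= step * grad(x)
    return x


def diverse_optima(grad, starts, tol=1e-3):
    """Run a local search from each start and keep only distinct end points."""
    found = []
    for x0 in starts:
        x = grad_descent(grad, x0)
        # Treat two results closer than `tol` as the same optimum.
        if all(abs(x - y) > tol for y in found):
            found.append(x)
    return sorted(found)


def f_grad(x):
    """Gradient of the illustrative function f(x) = (x**2 - 1)**2."""
    return 4.0 * x * (x * x - 1.0)


# Hypothetical starting points spread over the search interval.
optima = diverse_optima(f_grad, [-2.0, -1.5, -0.5, 0.5, 1.5, 2.0])
print(optima)
```

The example function has two equally good minima, near -1 and 1, so a single local search would report only one of them. Collecting distinct end points from many starts is the simplest way to expose that non-uniqueness.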

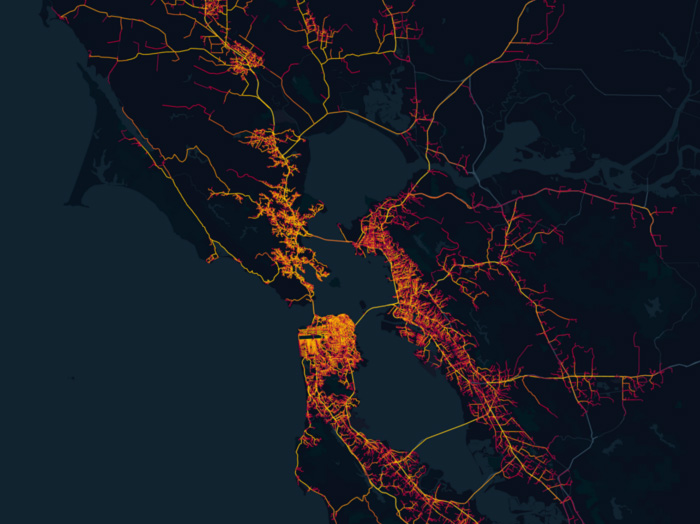

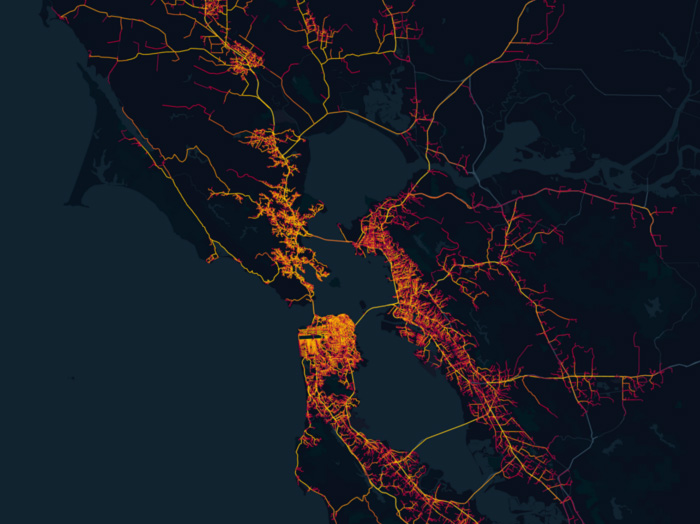

The goal of HAYSTAC is to develop a generative model that produces complete trajectories of stay locations given sparse Location-Based Service (LBS) data.

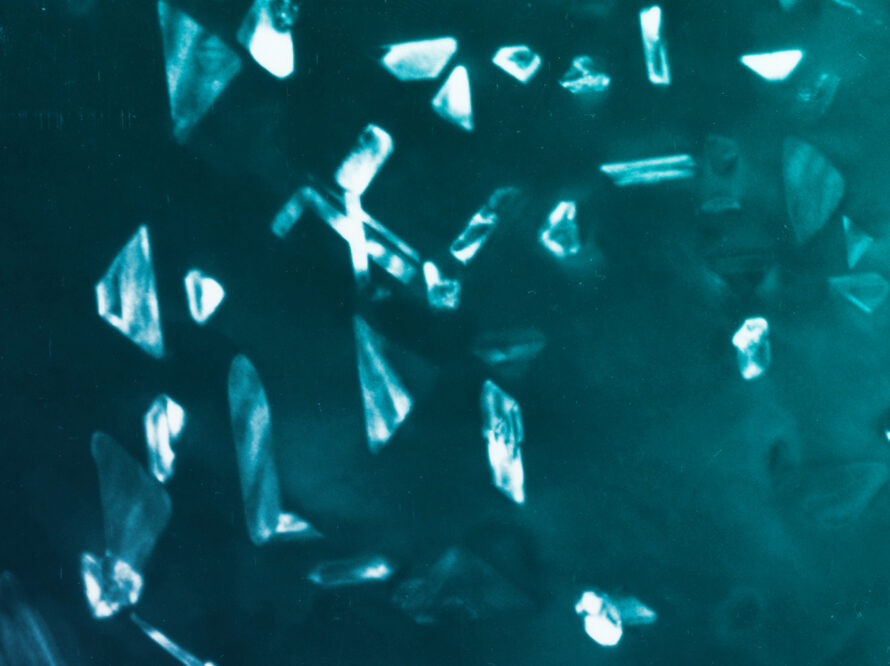

Supported by a U.S. DOE Early Career Award, IDEAL focuses on computer vision and ML algorithms and software to enable timely interpretation of experimental data recorded as 2D or multispectral images.

Using AI combined with network virtualization to support complex end-to-end network connectivity from edge 5G sensors to supercomputing facilities.

Developing principled numerical analysis methods to validate models for science and engineering applications.

Designing a differentiable neural network layer to enforce physical laws and demonstrate that it can solve many problem instances of parameterized partial differential equations (PDEs) efficiently and accurately.

Developing new AI methods to integrate small-angle X-ray scattering (SAXS) data from the Advanced Light Source (ALS) with AlphaFold’s AI-based protein structure prediction to identify physiologically representative protein conformations.

Developing secure AI/ML tools to both detect and mitigate cyber attacks on aggregations of Distributed Energy Resources (DER) in electric power distribution systems and microgrids.

Collaboration shows how machine learning methods can enhance the prognosis and understanding of traumatic brain injury (TBI).

Cutting-edge software system that accurately simulates the movement of an entire population through a region’s road networks.

New deep learning methods based on U-Net, Y-Net, and vision transformers for detecting and segmenting defects in lithium metal batteries, in support of expanding the electric vehicle fleet.

An open-source tool for generating accurate algebraic surrogates that are directly integrated with an equation-oriented optimization platform, providing a breadth of capabilities suitable for a variety of engineering applications.

Developing innovative machine learning tools to pull contextual information from scientific datasets and automatically generate metadata tags for each file.

Using statistical mechanics to interpret how popular machine learning algorithms behave, give users more control over these systems, and enable them to reach the results faster.

Enabling a faster and more precise topological regularization.

Turning text data into information that helps to identify key topics within certain science domains.

Developing an ML model that predicts, from its composition, whether a newly proposed chemical synthesis will be charge balanced, to assist researchers in validating their synthesis plans.

Developing novel visualization methods to improve our understanding of scientific ML models.

WaveCastNet is a novel AI-enabled framework for forecasting ground motions from large earthquakes.

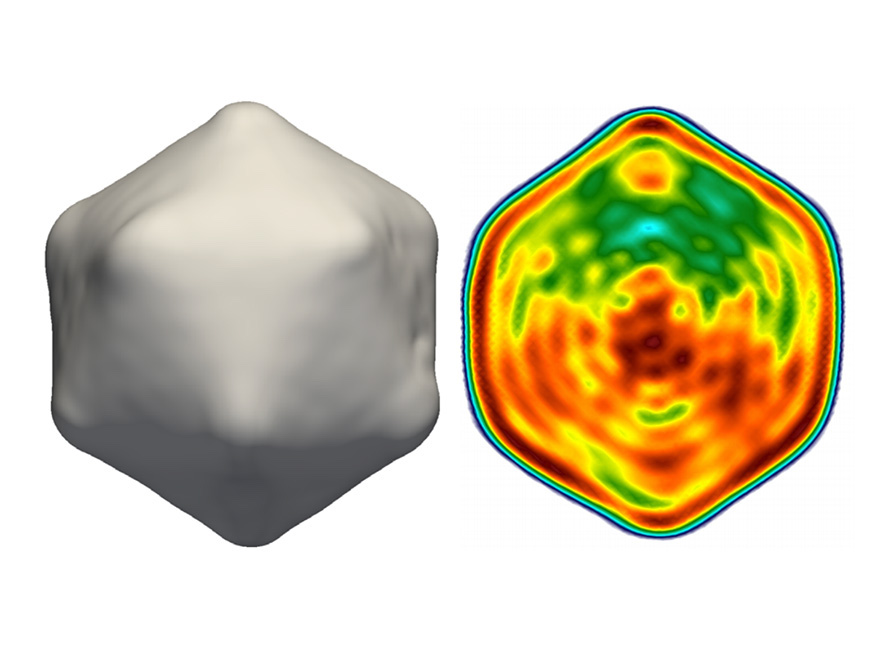

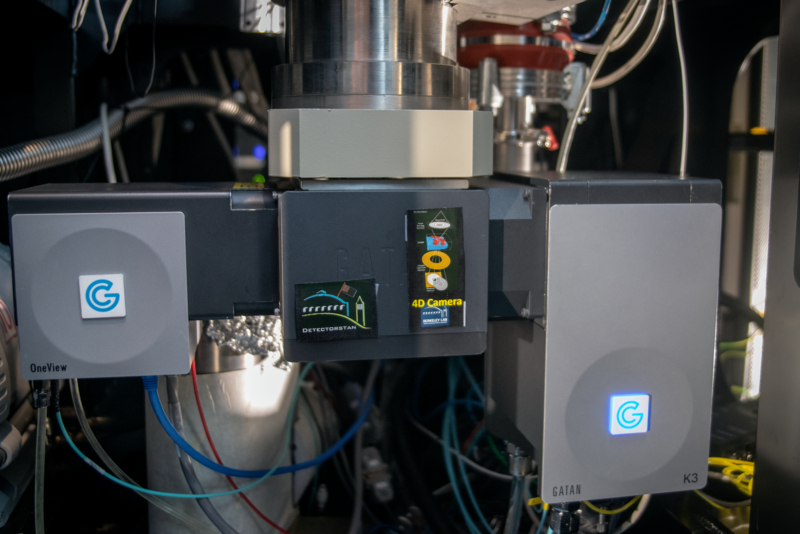

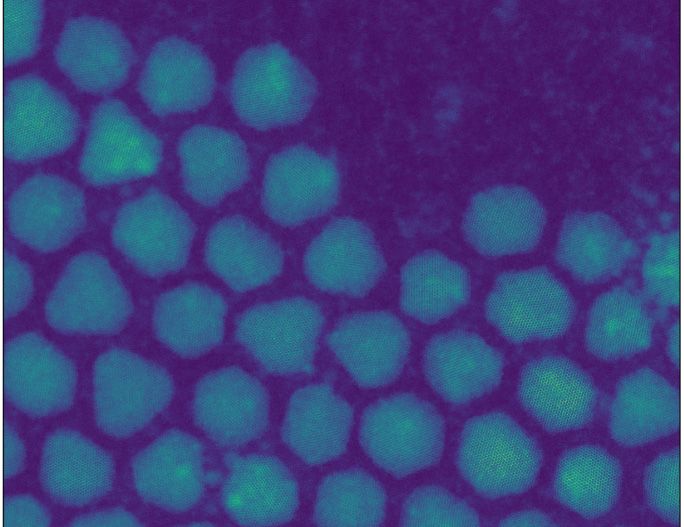

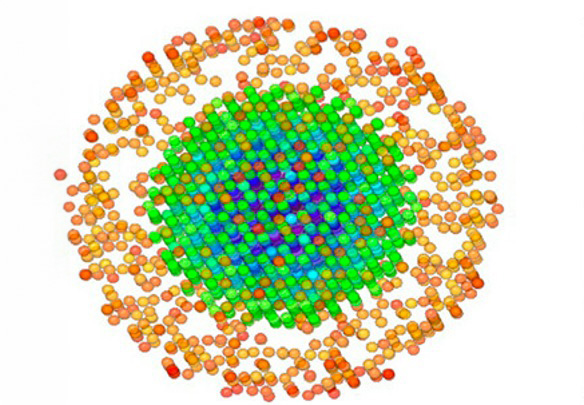

Developing and deploying methods and tools based on AI and ML to analyze electron scattering information from the data streams of fast direct electron detectors.

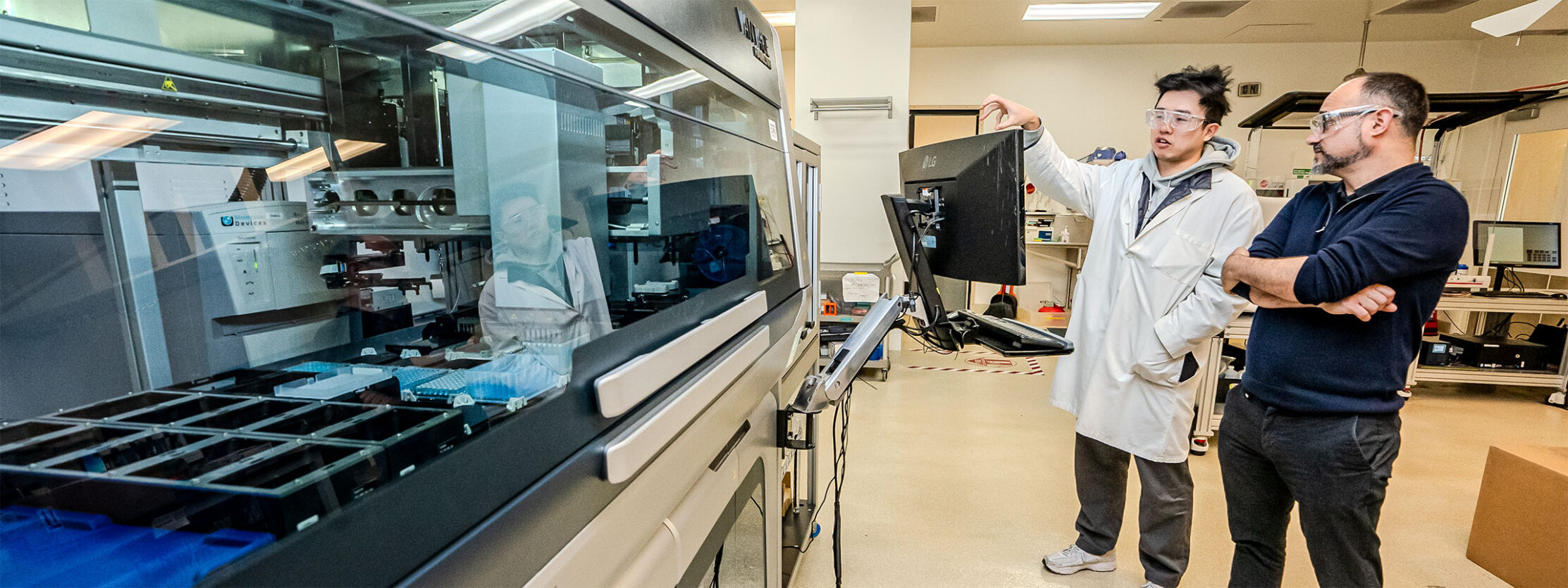

To accelerate development of useful new materials, researchers have developed a new kind of automated lab that uses robots guided by artificial intelligence.

Applying ML to atomic-scale images to extract the relationship between strain and composition in a battery material, paving the way for more durable batteries.

Harnessing the game-changing power of AI/ML for both modeling and control of particle accelerators.

Powering the next generation of nuclear physics discoveries with ML.

Opportunities for a modern grid and clean energy economy through the power of AI.

Approaching fundamental physics challenges through the lens of modern ML.

Bringing together molecular biology, biogeochemistry, environmental sensing technologies, and ML to help revolutionize agriculture and create sustainable farming practices that benefit both the environment and farms.

DuraMAT uses advanced data analytics to more accurately pinpoint photovoltaic (PV) module degradation and isolate its causes.

This project aims to develop new stochastic process-based mathematical and computational methods to achieve high-quality, domain-aware function approximation, uncertainty quantification, and, by extension, autonomous experimentation.

A flexible pipeline-based system for high-throughput acquisition of atomic-resolution structural data using an all-piezo sample stage applied to large-scale imaging of nanoparticles and multimodal data acquisition.

Berkeley Biomedical Data Science Center (BBDS) is a central hub of research at Lawrence Berkeley National Laboratory designed to facilitate and nurture data-intensive biomedical science.

Developing AI-based methods for predicting the occurrence of low-likelihood, high-impact climate extremes that are missed by traditional weather predictions.

Exploring new physics leading to higher energy efficiency in computing.

Using deep neural networks to reconstruct important hydrodynamical quantities from coarse or N-body-only simulations, vastly reducing the amount of compute resources required to generate high-fidelity realizations while still providing accurate estimates with realistic statistical properties.

Developing automated approaches to determine building characteristics, and retrofit and operational efficiency opportunities.

Developing a data-driven approach to synthesis science by combining text mining and ML, in situ and ex situ characterization of experimental synthesis, and large-scale first-principles modeling.

Deep learning approaches to detect parking lot locations using satellite imagery datasets.

Using ML, data sciences, informatics, and data management to advance state-of-the-art Earth science observations, modeling, and theory.

Enhancing utility operations during heat waves by developing new models to estimate hours-ahead electricity demand, the flexibility of aggregated building stocks, and the overheating risks of vulnerable communities.

Developing an exascale-ready agent-based epidemiological model that can speed predictions of disease spread.

Using leadership-class computers, big data, and machine learning – combined in learning-assisted physics-based simulation tools – to fundamentally change how watershed function is understood and predicted.

The FAIR Universe project is developing and sharing datasets, training frameworks, and data challenges and benchmarks to facilitate common development and standardization, all with a focus on uncertainty-aware training.

Improving bio-based product and fuel development through adaptive technoeconomic and performance modeling.

An open-source fire spread simulation framework that trains ML models on the output data of semi-empirical fire behavior models and feeds the learned logic into a cellular automata simulator to simulate fire spread.
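To make the idea of a learned rule driving a cellular automaton concrete, here is a minimal sketch under stated assumptions. It is not the framework's code: `spread_probability` is a hypothetical stand-in for a trained model, and the grid, cell states, and four-neighbor rule are simplifications chosen for the example.

```python
import random

UNBURNED, BURNING, BURNED = 0, 1, 2


def spread_probability(fuel):
    """Hypothetical stand-in for a learned model: fuel load -> ignition probability."""
    return max(0.0, min(1.0, fuel))


def step(state, fuel, rng):
    """Advance the automaton one tick: burning cells burn out and may ignite neighbors."""
    rows, cols = len(state), len(state[0])
    new = [row[:] for row in state]
    for r in range(rows):
        for c in range(cols):
            if state[r][c] != BURNING:
                continue
            new[r][c] = BURNED
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and state[nr][nc] == UNBURNED:
                    if rng.random() < spread_probability(fuel[nr][nc]):
                        new[nr][nc] = BURNING
    return new


def simulate(fuel, ignition, seed=0, max_steps=100):
    """Run from a single ignition cell until nothing is burning or max_steps is hit."""
    rng = random.Random(seed)
    state = [[UNBURNED] * len(fuel[0]) for _ in fuel]
    state[ignition[0]][ignition[1]] = BURNING
    for _ in range(max_steps):
        if not any(BURNING in row for row in state):
            break
        state = step(state, fuel, rng)
    return state


# Illustrative 5x5 landscape: column 2 has no fuel and acts as a firebreak.
fuel = [[1.0, 1.0, 0.0, 1.0, 1.0] for _ in range(5)]
final = simulate(fuel, ignition=(2, 0))
for row in final:
    print(row)
```

In this toy landscape the fire consumes the two columns left of the firebreak and never reaches the right side. Replacing `spread_probability` with a model fitted to fire behavior outputs is the step the description refers to as providing learned logic to the simulator.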

Using ML to accelerate the discovery of novel upconverting nanoparticles (UCNPs) while domain-specific knowledge is being developed.

New statistical-modeling workflow may help advance drug discovery and synthetic chemistry.

A next-generation multi-scale modeling and optimization framework to support the U.S. power industry.

Over the next decade, the La Silla Schmidt Survey (LS4) will leverage an automated pipeline to uncover transient sky events in the Southern Hemisphere.

Enhancing particle reconstruction by harnessing the power of language models.

Applying ML methods to predict macroscopic fundamental diagrams (MFDs) across U.S. urban areas and capture the impacts of location-specific input features on network flow-density relationships at a large scale.

Developing a dynamic vehicle transaction model to fully evolve households and their vehicle fleet composition and usage over time for forecasting vehicle technology adoptions in the U.S.

AI software gleans insights from health records to shed light on chronic COVID symptoms.

Berkeley Lab scientists developed a new tool that adapts ML algorithms to the needs of synthetic biology to guide development systematically.

Developing a suite of tools aimed at lowering the barriers of access to advanced data processing for all users.

MLExchange is a shared platform that lowers the barrier to entry by leveraging advances in ML methods across user facilities, thus empowering domain scientists and data scientists to discover new information using existing and new data with novel tools.

MLPerf HPC is a machine learning performance benchmark suite for scientific ML workloads on large supercomputers.

Predicting optimal electrode materials with high activity for aqueous electrochemical selenite and selenate reduction.

Providing computational and modeling solutions to optimize the performance, energy use, and economic cost of existing and developing water treatment processes and infrastructures.

Developing fast Bayesian statistical analysis methods for scientific data analysis that can be applied to a wide range of scientific domains and problems.

Developing a software platform to allow utilities to share relevant cybersecurity information with one another in a manner that does not compromise the privacy of customers in their service territories.

A resource for accelerating the identification, design, scaleup, and integration of innovative rare earth elements and critical processes.

An open-source, optimization-based, downloadable and executable decision-support application for produced water management and beneficial reuse.

This project explores approaches for developing and validating reliable algorithms for real-time computing at the scientific edge.

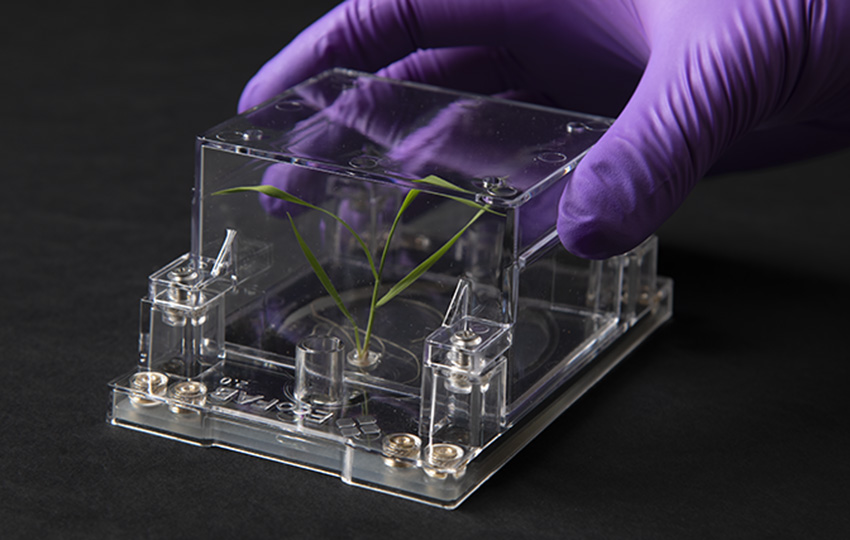

Harnessing the power of AI to study plant roots, offering new insights into root behavior under various environmental conditions.

Sky surveys for downstream tasks like morphology classification, redshift estimation, similarity search, and detection of rare events, paving new pathways for scientific discovery.

The Perlmutter system is a world-leading AI supercomputer consisting of over 6,000 NVIDIA A100 GPUs, an all-flash filesystem, and a novel high-speed network.

A Fourier space, complex-valued deep-neural network, FCU-Net, to invert highly nonlinear electron diffraction patterns into the corresponding quantitative structure factor images.

Artificial intelligence is bringing transformative solutions to complex scientific challenges. Through advanced computation, network facilities, and data integration, Berkeley Lab is advancing the foundations of powerful new AI capabilities and using AI for discoveries in materials, energy, chemistry, physics, biology, climate science, and more.